Machine learning and artificial intelligence (AI) solutions are increasingly pervasive in modern society. Cloud-based smart AI assistants are revolutionizing the way we work, learn, and communicate. At the same time, advances in AI at the edge are unlocking unprecedented capabilities in robotics, smart appliances, autonomous vehicles, and wearable devices. However, these AI training and inference tasks impose substantial computational, energy, memory, and carbon footprints. Over the past decade, the scale of these workloads has moreover grown at an extraordinary pace, surpassing even the projections of Moore's law. As a result, fundamental hardware and architecture research is required to sustain AI's transformative impact. At MICAS, our research team has spent the last decade addressing these challenges by exploring improved hardware architectures, advanced chip implementations, and hardware-algorithm co-optimization techniques for hardware-efficient AI solutions.

Enabling powerful ML algorithms in a constrained memory, latency, energy and/or carbon budget comes with several exciting challenges. Execution efficiency can be obtained by customizing processor architectures to the models of interest. Yet, the speed at which new models emerge, impede such tight co-optimization, and require the hardware platforms to be flexible towards future developments. The challenge is hence to strike the right balance between customization and flexibility. Our MICAS team continued to work on several innovations towards this goal.

New processor architectures have to be developed to accelerate the targeted workloads. Existing CPU's and GPU's fail to achieve sufficient efficiency. New NPU (neural processing units), TPU (tensor processing units) or IMC (in-memory computing) designs are developed, and offer significant speed ups. Yet, we are at a point where single core solutions no longer suffice. New multi-accelerator systems have to be explored.

Our vision to achieve efficient execution, for a multitude of diverse ML workloads, is to combine different accelerator cores in heterogeneous multi-core processing platforms. The Diana platform, taped out in 2021, was the first heterogeneous multicore system developed in our lab – combining a RICV-V CPU, a digital AI accelerator and an analog-in-memory AI accelerator. In 2023, we continued with the design of various AI accelerators for bit-sparse DNN inference and for evaluating emerging probabilitsic graphical models. Since 2024, we focus our efforts on a RISC-V based processor architeture template, denoted as "SNAX", enabling the easy integration of a wide variety of ML-accelerators in a RISC-V framework.

In parallel, we are developing integrated compile flows, which allow to smoothly customize for heterogeneous platforms consisting of a diverse mix of accelerators. A first flow based on TVM, call "HTVM", has been rolled out and been deployed for the Diana and GAP9 chips. Currently, the flow is migrated to MLIR, to enabling increased flexibility and customization for multi-accelerator SNAX platforms.

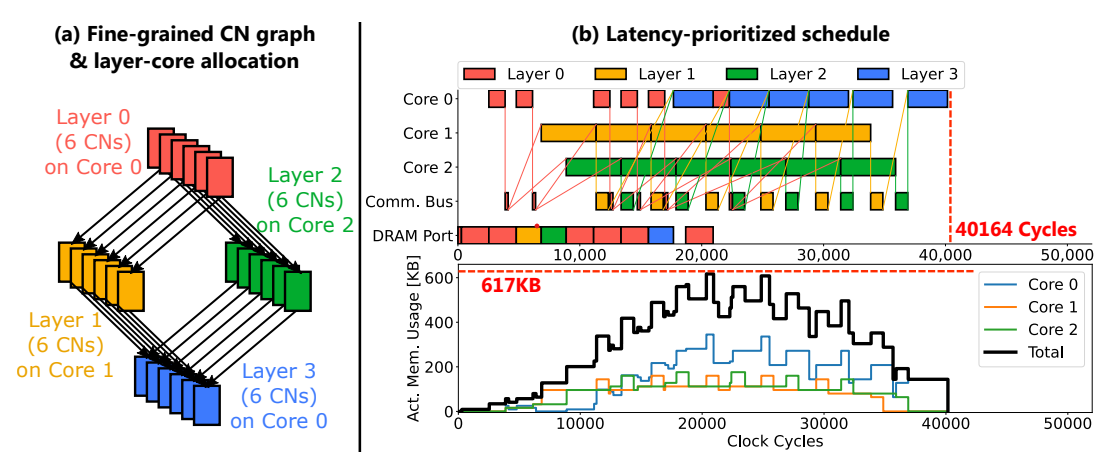

The degrees of freedom in designing such ML accelerators are very large. It is time-wise impossible to develop each of them at RTL level to assess their relative performance. When migrating from single-core to multi-accelerator heterogeneous systems, the design space as well as the scheduling or mapping space again increases drastically. Moreover, the optimal hardware architecture is tightly interwoven with the optimal execution schedule when mapping different workloads on the hardware, requiring co-optimization. To enable this, a rapid modeling and design/scheduling space exploration (DSE) frameworks are developed at MICAS, called ZigZag (for single core) and Stream (for multi-core). ZigZag and Stream are available open source, and is continuously expanded by our team. In 2024, our tool suite was extended with an extension for Large Language Models (ZigZag-LLM), a carbon estimation model (in main ZigZag branch), as well as a stochastic framework for sparse AI processors.

All frameworks are available fully open-source on github, using the links in the text above.

While neural networks continue to thrive, it becomes more and more clear that we will need a broader variety of models for the capable, secure, reliable, efficient AI models of the future. Neural networks excel at handling complex, high-dimensional data, offering scalability and flexibility for diverse applications. However, they often struggle with interpretability and uncertainty handling. Probabilistic models address this gap by incorporating robustness to uncertainty and providing confidence measures, but they can be computationally intensive. Symbolic reasoning, on the other hand, brings interpretability, and allows to insert expert knowledge through structured logic and rules, which are essential for tasks requiring transparency and explicit decision-making.

However, current hardware architectures are optimized for the mainstream neural network execution, while probabilistic or symbolic reasoning algorothms do not map well on existing CPU, GPU or TPU platforms. At MICAS, we are actively researching computer architectures for these novel workloads, aiming at one platform which can support hybrid mixes of these different workloads, blending high-throughput matrix operations with sparse computations, stochastic processes, and graph-based reasoning. These demands call for innovative architectures that combine specialized accelerators, memory hierarchies, and co-optimized software to handle the diverse and dynamic requirements of this next generation of AI.

Current research topics

Current research topics